Alibaba

July 31, 2025

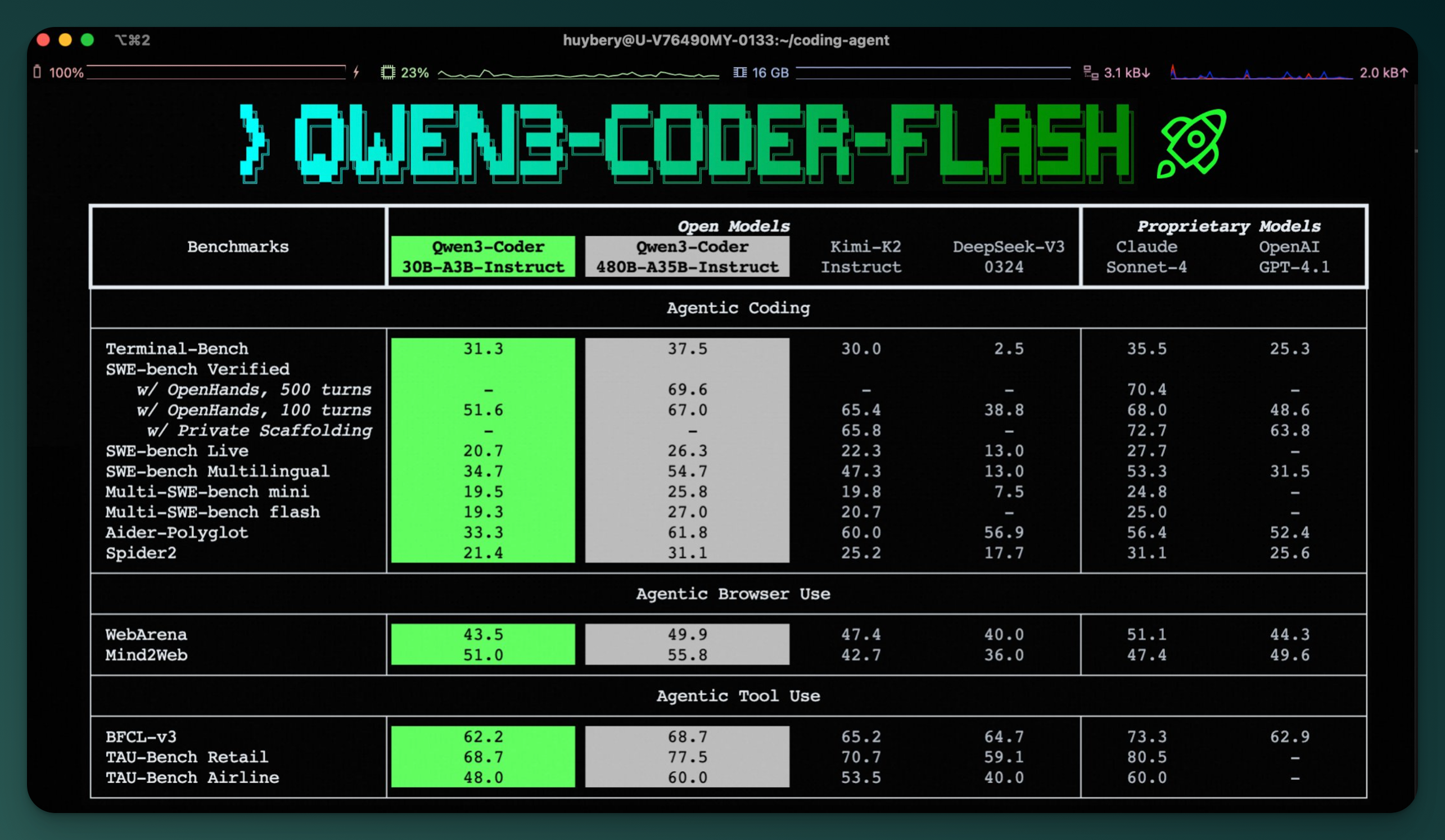

Alibaba Shrinks Its Coding AI to Run Locally

Qwen3-Coder-Flash is a compact 30B-parameter mixture-of-experts (MoE) model that can run inference locally on modern MacBooks. It joins Alibaba's broader Qwen3 ecosystem as a lightweight counterpart to the heavyweight 480B hosted version, giving developers a pragmatic hybrid setup for coding workflows.

July 29, 2025

Alibaba Drops Qwen3‑30B Instruct Models With Local Deployment in Mind

Alibaba has released two new open-source MoE models in its Qwen3 series, one of them an FP8-quantized variant. The 30B-parameter architecture activates only 3B parameters per forward pass, which cuts per-token compute, although all 30B weights must still fit in memory. With 128K context support and strong reasoning and coding benchmarks, these models are well suited to local use on Apple Silicon Macs and other hardware.

July 22, 2025

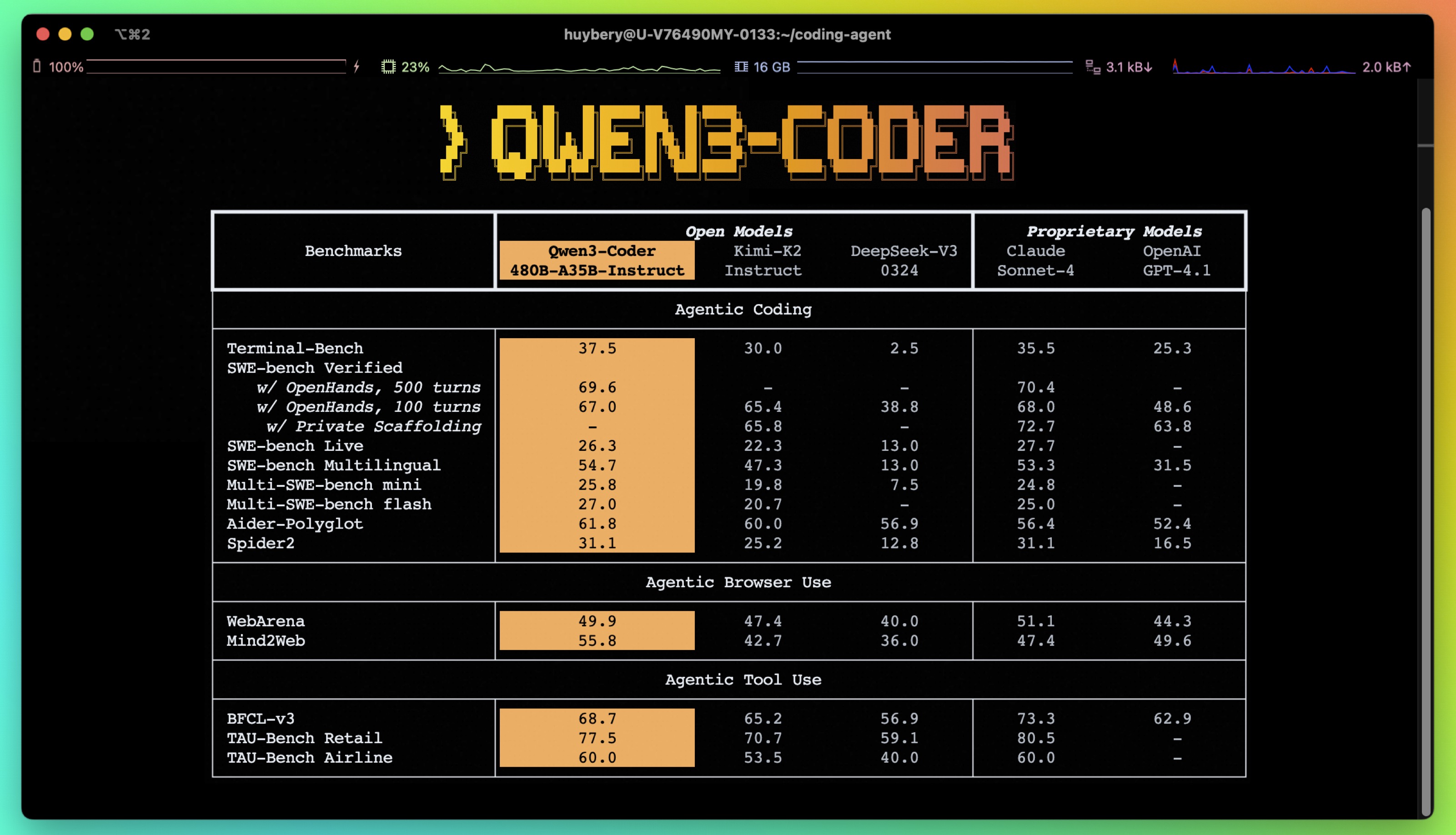

Alibaba Releases Qwen3‑Coder, a 480B-Parameter Open-Source Model for Autonomous Coding

The Qwen team at Alibaba has launched Qwen3‑Coder, a massive open-source AI model designed for complex coding workflows. With 480 billion parameters and support for a 1 million token context window, the model challenges proprietary leaders like Claude Sonnet 4 while remaining freely available under Apache 2.0.

July 21, 2025