Alibaba’s Qwen3‑235B Instruct Model Gets Major Upgrade With Longer Context and Sharper Reasoning

Alibaba Cloud has released a refined version of its flagship Qwen3‑235B model, tuned specifically for instruction-following tasks. The update splits the non-thinking mode into its own model, offers a 256K-token context window that can be extrapolated to 1 million tokens, and reports gains on reasoning, coding, and alignment benchmarks. The move simplifies deployment decisions for developers while solidifying Qwen’s standing in the open-source large language model arena.

The new release, dubbed Qwen3‑235B‑A22B‑Instruct‑2507, is an upgrade of Alibaba Cloud’s largest open-source language model and is designed to improve instruction-following performance across a range of AI tasks. It isolates the non-thinking mode from its hybrid predecessor, a change that follows community feedback favoring clearer task specialization in large-scale Mixture-of-Experts (MoE) models. The model supports a 256K-token context length, with extrapolation to 1 million tokens, addressing long-context use cases without sacrificing instruction quality.

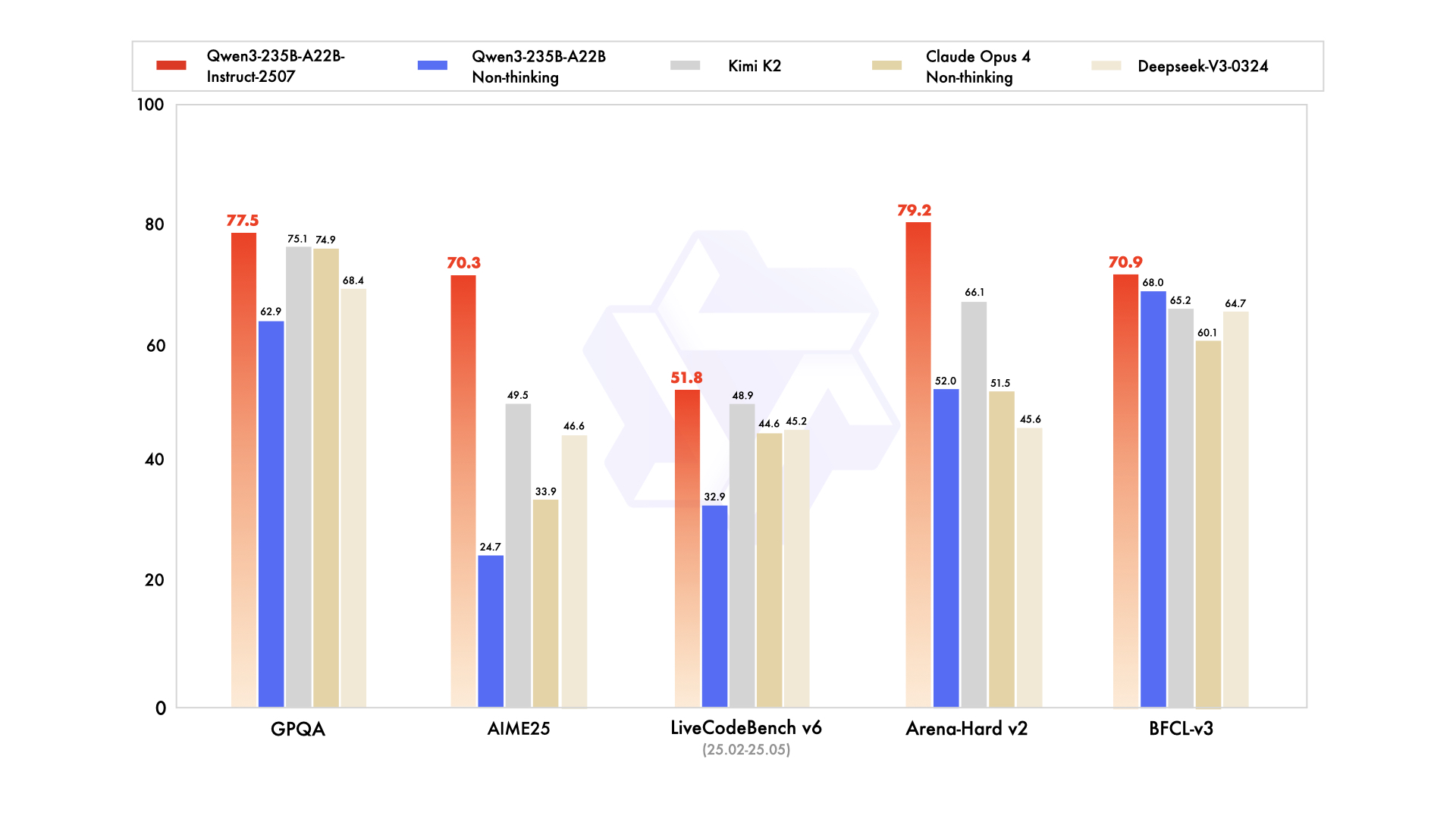

Benchmarks published by Alibaba’s Junyang Lin show across-the-board improvements in reasoning, coding, alignment, and agent use cases when compared to previous versions. The separation of thinking and non-thinking modes is intended to streamline deployment choices for developers by offering dedicated pathways for retrieval-augmented generation, reasoning agents, or high-quality completions.

The update arrives at a time when open-source LLM projects are under pressure to compete with rapid advancements in proprietary models. By doubling down on specialized instruction-tuned releases, Alibaba is positioning Qwen as a credible alternative for enterprise AI developers and academic researchers who prioritize transparency and long-context tasks. The new model is available on Hugging Face, reaffirming Qwen’s commitment to openness in model access and experimentation.
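For readers who want to try the release from Hugging Face, the following is a minimal sketch rather than an official recipe. It assumes the repository name `Qwen/Qwen3-235B-A22B-Instruct-2507`, the `transformers` and `accelerate` libraries, and hardware with enough GPU memory for a 235B-parameter MoE model. The helper names `build_messages` and `generate_reply` are hypothetical and exist only for illustration.

```python
# Sketch only: MODEL_ID is assumed to be the Hugging Face repo name for this release.
MODEL_ID = "Qwen/Qwen3-235B-A22B-Instruct-2507"


def build_messages(user_prompt, system_prompt=None):
    """Return a chat-format message list for an instruction-following request."""
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def generate_reply(user_prompt, max_new_tokens=256):
    """Load the model and generate one reply (needs substantial multi-GPU memory)."""
    # Imported lazily so build_messages works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    # device_map="auto" relies on the accelerate package to place the weights.
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype="auto", device_map="auto"
    )
    text = tokenizer.apply_chat_template(
        build_messages(user_prompt), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer([text], return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so only the newly generated reply is decoded.
    new_tokens = output_ids[0][inputs.input_ids.shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `generate_reply("Summarize the benefits of long-context models.")` would download the weights on first use, so most developers will likely prefer a hosted endpoint or a quantized variant for experimentation.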
