Alibaba’s Qwen Team Unleashes Qwen-MT, Teases Wan 2.2 in Breakneck Model Rollout

Qwen‑MT, built on Qwen3, brings instruction-tuned translation across 92 languages. With near-daily model drops and Wan 2.2 on the horizon, Qwen is setting a relentless pace in open AI development.

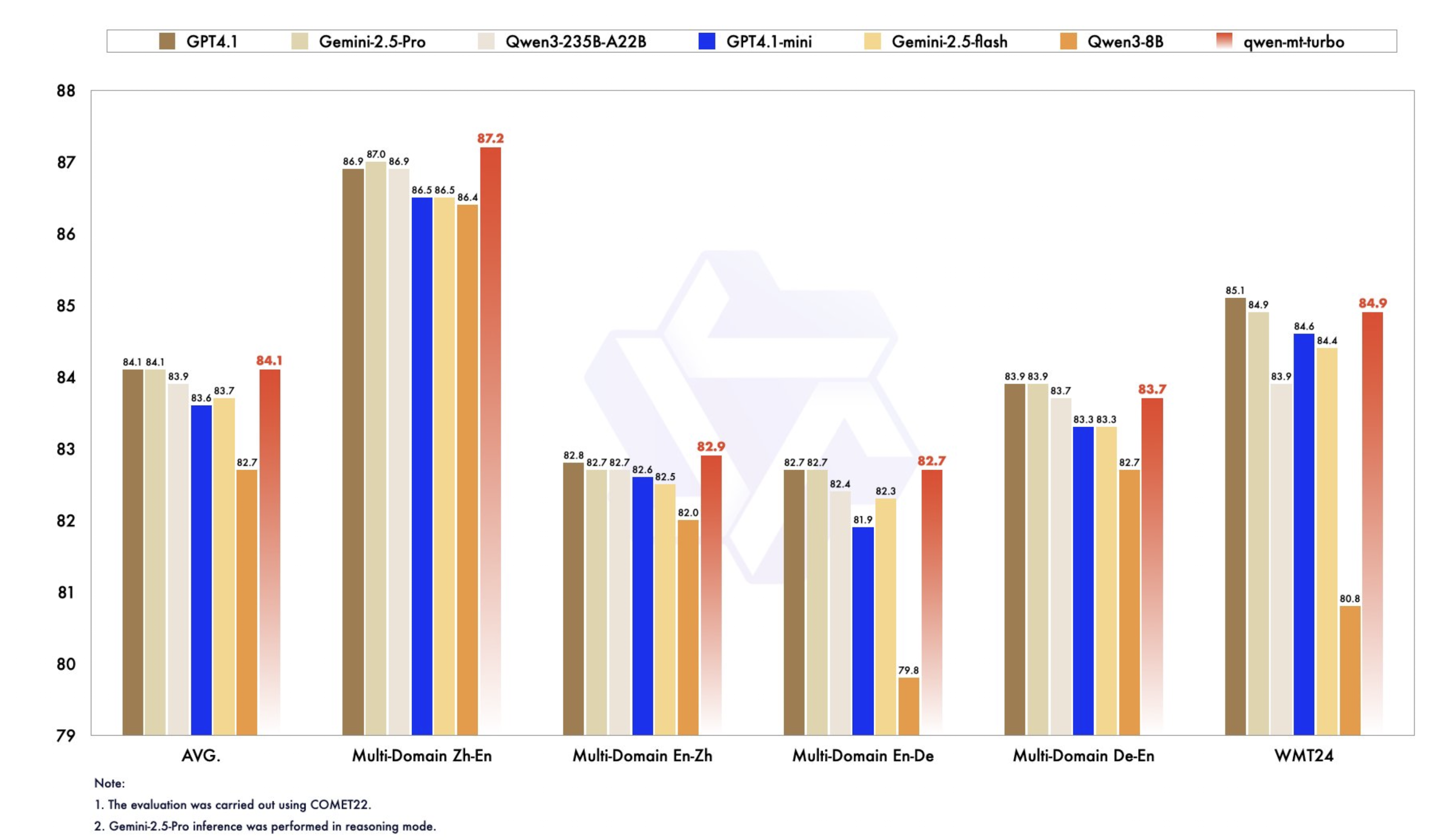

Alibaba's Qwen team has added yet another model to its expanding lineup. Qwen‑MT is a multilingual machine translation model built on the Qwen3 architecture. It covers 92 languages, with variants optimized for instruction-following and for real-time translation. The release includes a Turbo version, and the team reports strong results on standard translation benchmarks such as FLORES-101.

This is Alibaba’s third major model release in a week, following Qwen3‑Coder and a Thinking variant. The team also confirmed that its next-generation video model, Wan 2.2, is coming soon. That puts Qwen on a near model-a-day pace, a rapid open-source counter to proprietary LLM platforms.

Qwen‑MT reflects Alibaba’s push to build modular, interoperable models tuned for real-world use. For global businesses, this means access to an open translation engine with strong instruction-following and long-context capabilities. For developers and AI builders, Qwen’s pace is a signal: the frontier is open, and moving fast.
