Wan 2.2 Debuts as Fully Open-Source, Multimodal Video Model

Text-to-video, image-to-video, and hybrid input modes now run locally at 720p and 24 fps. The Alibaba Wan team has open-sourced Wan 2.2 under Apache 2.0 and integrated it into ComfyUI and Hugging Face on launch day.

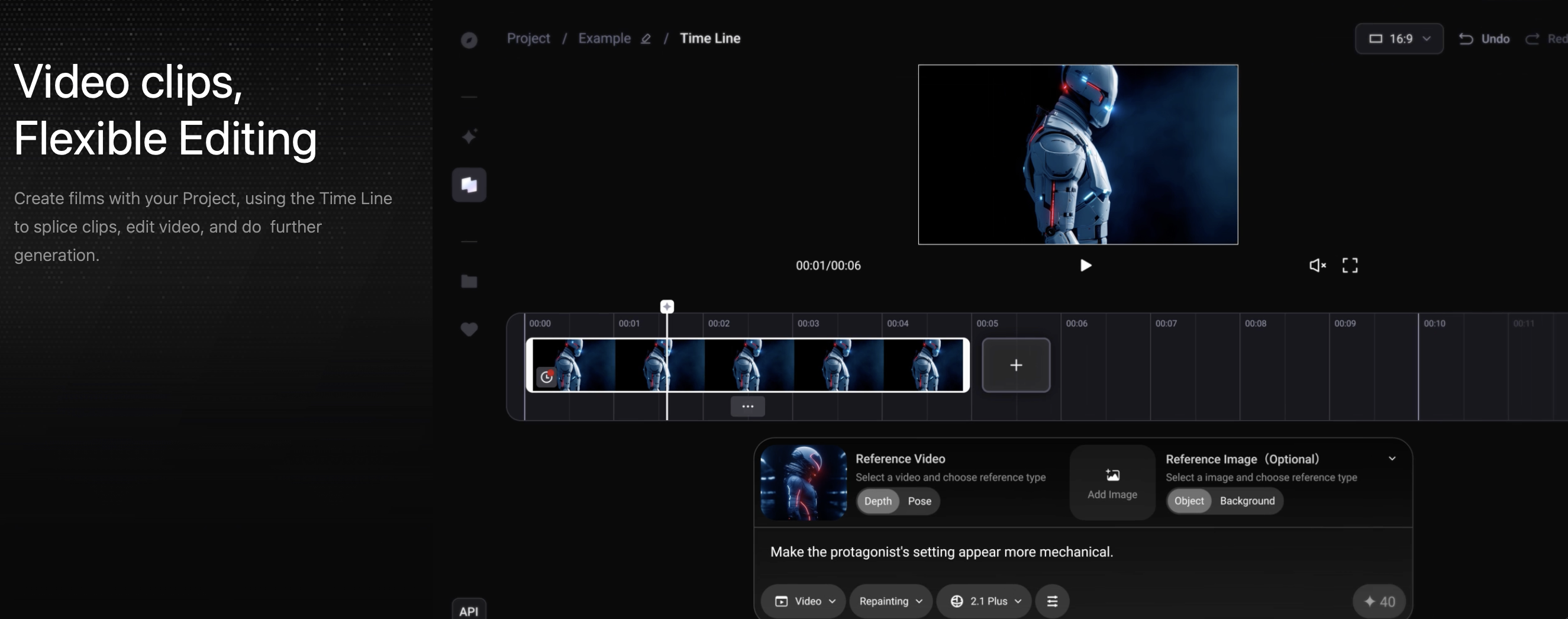

Wan 2.2 is the latest release from Alibaba’s Wan team. It builds on its predecessor with expanded capabilities and a fully open-source license. The model supports three generation modes: text-to-video (T2V), image-to-video (I2V), and combined text-and-image-to-video (TI2V). It comes in two sizes, 5B and 14B parameters. A Mixture-of-Experts (MoE) design built around two noise-level experts boosts cinematic visual quality, improves complex motion, and enhances semantic control. The TI2V-5B model runs efficiently on just 8 GB of VRAM. The 14B variants require more resources but offer higher generation fidelity, and they can run with offloading when VRAM is limited.
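To make the dual-noise expert idea more concrete, here is a minimal toy sketch in Python of how a denoising loop might hand each step to one of two experts depending on the current noise level. It is not Wan 2.2's actual code: the class name, the `boundary` value of 0.5, the linear noise schedule, and the stand-in expert functions are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# Hypothetical stand-in for an expert network: maps (latent, noise_level) -> latent.
Expert = Callable[[float, float], float]


@dataclass
class TwoExpertDenoiser:
    """Toy router: one expert for noisy early steps, another for late refinement."""
    high_noise_expert: Expert
    low_noise_expert: Expert
    boundary: float = 0.5  # assumed switch point; 1.0 = pure noise, 0.0 = clean

    def pick(self, noise_level: float) -> str:
        # Only one expert is chosen per step, so each step costs one forward pass.
        return "high" if noise_level >= self.boundary else "low"

    def step(self, latent: float, noise_level: float) -> float:
        if self.pick(noise_level) == "high":
            return self.high_noise_expert(latent, noise_level)
        return self.low_noise_expert(latent, noise_level)


def run_schedule(denoiser: TwoExpertDenoiser, latent: float,
                 num_steps: int) -> Tuple[float, List[str]]:
    """Walk an assumed linear schedule from noise level 1.0 toward 0.0."""
    used: List[str] = []
    for i in range(num_steps):
        noise_level = 1.0 - i / num_steps
        used.append(denoiser.pick(noise_level))
        latent = denoiser.step(latent, noise_level)
    return latent, used


if __name__ == "__main__":
    # Placeholder experts: a coarse "layout" pass and a fine "detail" pass.
    toy = TwoExpertDenoiser(
        high_noise_expert=lambda x, t: x * 0.5,
        low_noise_expert=lambda x, t: x - 0.1,
    )
    final_latent, experts_used = run_schedule(toy, latent=8.0, num_steps=4)
    print(experts_used, final_latent)
```

In this toy version, the early, noisy steps go to one expert and the final steps to the other. A real model would operate on video latents and would choose its switch point from its own noise schedule.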

Accessibility is a central feature of this release. Wan 2.2 is available from day one via ComfyUI and Hugging Face Diffusers. It can generate 720p video at 24 frames per second from text, images, or a combination of both. MacBook Pro users with an M4 Max and 128 GB of unified memory can run the models locally. On that configuration, no offloading or memory workarounds are needed, so high-fidelity generation runs directly on a laptop.

The open-source release under Apache 2.0 marks a strategic shift. Wan 2.2 now ranks among the most permissively licensed, high-quality video generation models available for local use. This invites integration into enterprise-grade pipelines, creative software, and no-code development stacks. With the 5B model fitting in 8 GB of VRAM, it also avoids the high memory requirements typical of comparable models.

Wan 2.2 sets a new local benchmark for AI-powered video creation. Its combination of input flexibility, runtime efficiency, and open licensing positions it as a leading tool for both indie creators and advanced AI production workflows.

Pure Neo Signal:

Sure, Veo 3 spits out crisp 1080p with sound. And Runway’s Aleph has the kind of diffusion engine that makes Hollywood sweat. But here’s the fine print: one locks you into YouTube-tier license terms, the other comes with an invoice and your GPU’s soul. Meanwhile, Wan 2.2 just showed up, open-sourced, multimodal, and running 720p on your MacBook without crying for VRAM. That’s not a gimmick. That’s a signal. If the future of AI video is “local, fast, and free,” Alibaba just gave it a working prototype.
