August 26, 2025

Google debuts Gemini Nano-Banana image editing at a lower cost than OpenAI

Google has upgraded its Gemini image editing model, now named Gemini 2.5 Flash Image. The model improves likeness preservation and consistency across edits while undercutting OpenAI’s average image generation cost. Pricing positions Gemini as a lower-cost option for developers and enterprises focused on high-volume content workflows.

August 14, 2025

Google adds automatic memory and temporary chat controls to Gemini

Google has begun rolling out automatic memory in Gemini AI, which lets the assistant remember details from past interactions by default. The update also introduces a “Temporary Chat” mode whose conversations are not stored long-term or used for training and expire after 72 hours. The changes aim to balance personalization with stronger privacy controls.

August 14, 2025

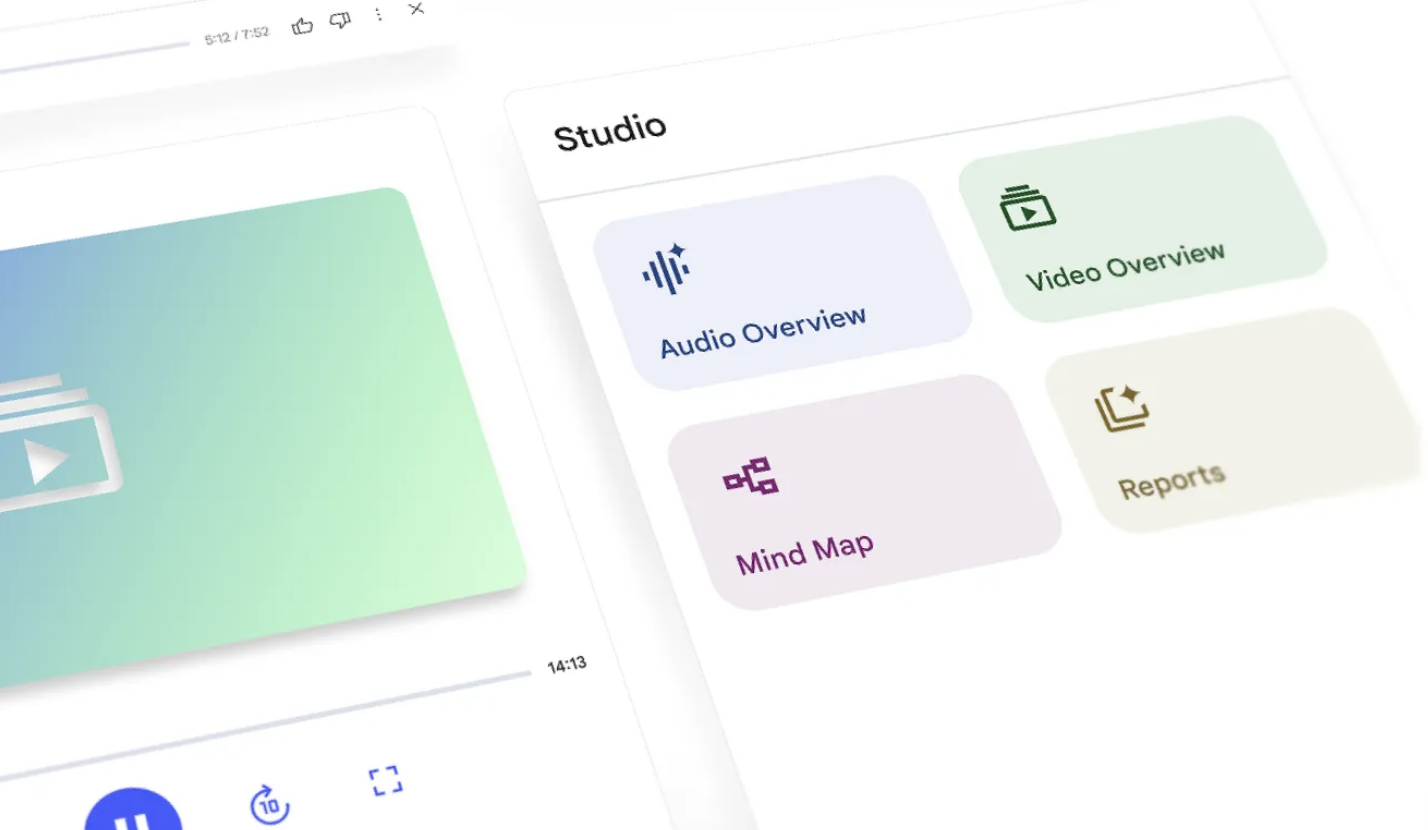

Google tests Magic View in NotebookLM as potential new data visualization feature

Google is trialing a new experimental feature in NotebookLM called Magic View. The tool displays an animated, dynamic canvas when activated, resembling a generative simulation. While the exact function is unconfirmed, early indications suggest it could provide new ways to visualize and interact with notebook content. The development follows recent updates to NotebookLM that expand multimedia support, including video overviews and mind maps.

August 14, 2025

Google releases Gemma 3 270M, an ultra-efficient open-source AI model for smartphones

Google DeepMind has released Gemma 3 270M, a compact 270 million-parameter model designed for instruction following and text structuring. The open-source model is optimized for low-power hardware, including smartphones, browsers, and single-board computers. Its efficiency enables AI capabilities in privacy-sensitive and resource-constrained environments.
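To put the 270 million-parameter figure in perspective, the storage needed for the weights alone is roughly parameters × bytes per parameter. The sketch below applies that arithmetic at a few common precisions. It assumes the nominal 270M count and deliberately ignores activations, KV cache, and runtime overhead, so actual on-device memory use will be higher.

```python
# Rough, weight-only memory estimate for a 270M-parameter model.
# Assumptions: nominal parameter count; activations, KV cache, and
# runtime overhead are NOT included.
PARAMS = 270_000_000

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_megabytes(params: int, precision: str) -> float:
    """Return weight storage in decimal megabytes (1 MB = 10**6 bytes)."""
    return params * BYTES_PER_PARAM[precision] / 1_000_000


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_megabytes(PARAMS, precision):.0f} MB")
```

Under these assumptions, bf16 weights come to about 540 MB and 4-bit weights to about 135 MB, which helps explain why a model of this size is a plausible fit for phones, browsers, and single-board computers.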

August 12, 2025

Google makes Jules, its AI coding agent, generally available

Google has transitioned Jules from Google Labs beta to full public release. Powered by Gemini 2.5 Pro, Jules runs asynchronously in a cloud VM to read, test, improve, and visualize code with minimal developer oversight. The launch adds a free tier alongside paid “Pro” and “Ultra” plans and introduces a critic capability that flags issues before changes are submitted.

August 5, 2025

DeepMind Debuts Genie 3 for Real-Time Text-to-3D World Generation

A new generation of world models is here. DeepMind’s Genie 3 turns text prompts into playable 3D environments at 24 fps with persistent memory and interactive elements. While still in research preview, it represents a major step toward AI agents that can learn and act in open-ended virtual worlds.

July 29, 2025

Google Adds Video Overviews and Smarter Studio Tools to NotebookLM

NotebookLM users can now generate narrated video summaries and work across media formats in one unified research space. Google’s latest updates aim to streamline how users synthesize, visualize, and present complex information.

July 25, 2025

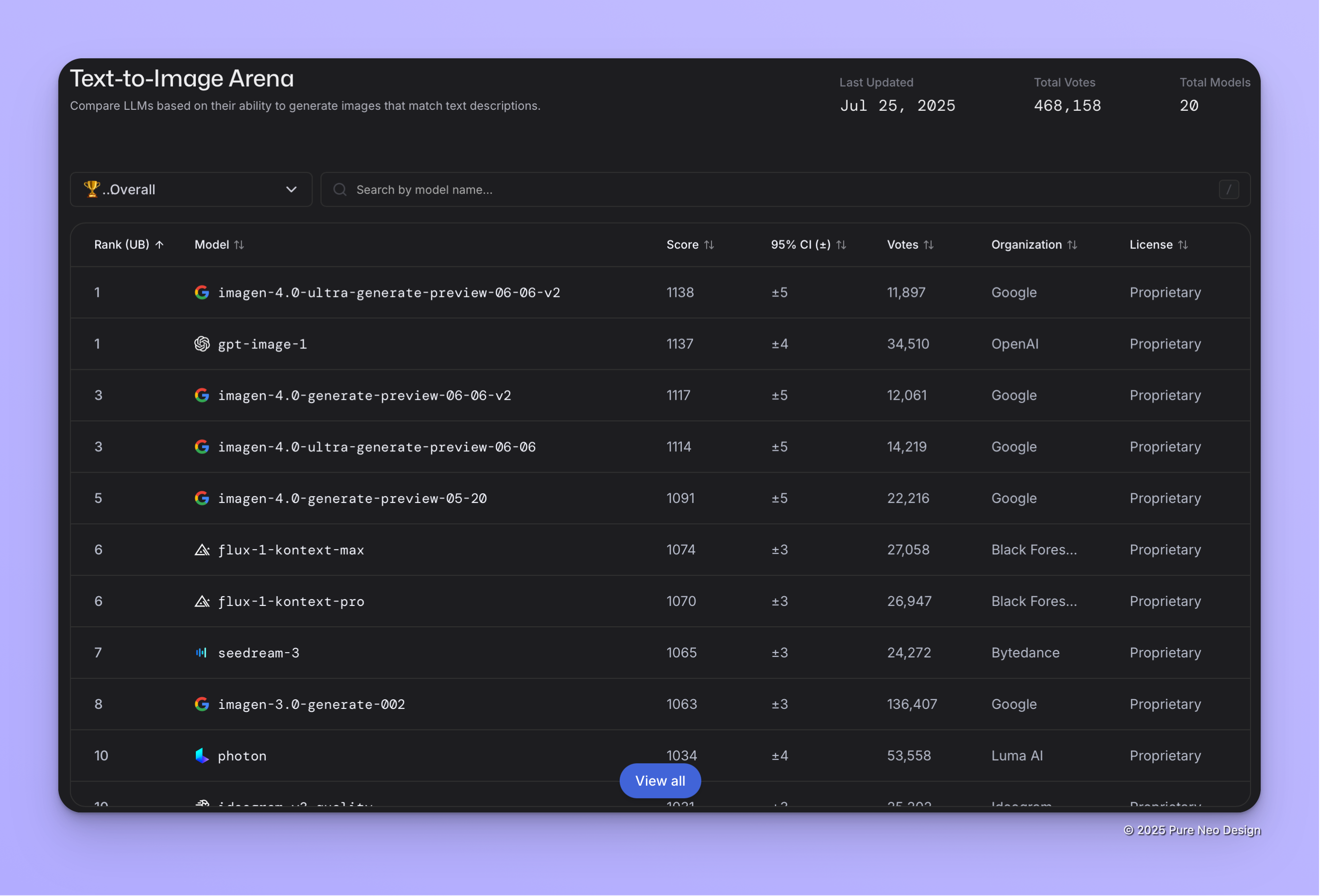

Google DeepMind’s Imagen 4.0 Models Join LMArena Text-to-Image Leaderboard

Google DeepMind’s latest Imagen 4.0 models have officially landed on the LMArena Text-to-Image leaderboard. The July 26 update benchmarks two new variants of Imagen 4.0 against top open and closed-source models. This provides a rare, head-to-head performance view for researchers and developers watching the generative image space evolve.

July 19, 2025

Google’s Veo 3 Brings Sound-On Video Generation to Gemini API

The latest version of Google’s video model, Veo 3, is now available via the Gemini API and Vertex AI. It can generate short, cinematic videos with synchronized audio, including dialogue and sound effects. It is Google’s first generative model to combine visuals and sound, expanding the company’s capabilities in AI-powered content creation.

July 12, 2025

Google Rolls Out Gemini Embedding Model for RAG and Multilingual NLP

Google has launched its new Gemini Embedding model, gemini-embedding-001, offering high-dimensional, multilingual semantic embeddings optimized for retrieval-augmented generation (RAG) and natural language tasks. With top benchmark scores and competitive pricing, it aims to set a new standard for embedding APIs.
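In a RAG pipeline, embeddings like these are typically used to rank stored passages by their similarity to a query before the best matches are handed to a generator. The sketch below shows that ranking step with cosine similarity. The 3-dimensional vectors and document ids are hypothetical stand-ins: real gemini-embedding-001 vectors would come from the Gemini API and have far more dimensions, but the ranking logic is the same.

```python
import math

# Hypothetical toy embeddings standing in for real model output.
DOCS = {
    "pricing-faq": [0.9, 0.1, 0.0],
    "setup-guide": [0.1, 0.8, 0.2],
    "release-notes": [0.2, 0.2, 0.9],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], docs: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the ids of the k documents most similar to the query vector."""
    ranked = sorted(docs, key=lambda doc_id: cosine(query_vec, docs[doc_id]), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    # Hypothetical embedding of a query such as "How much does it cost?"
    query = [0.8, 0.3, 0.05]
    print(top_k(query, DOCS))
```

A production system would usually swap the linear scan for a vector index, but the scoring step it accelerates is this same similarity comparison.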

July 25, 2025

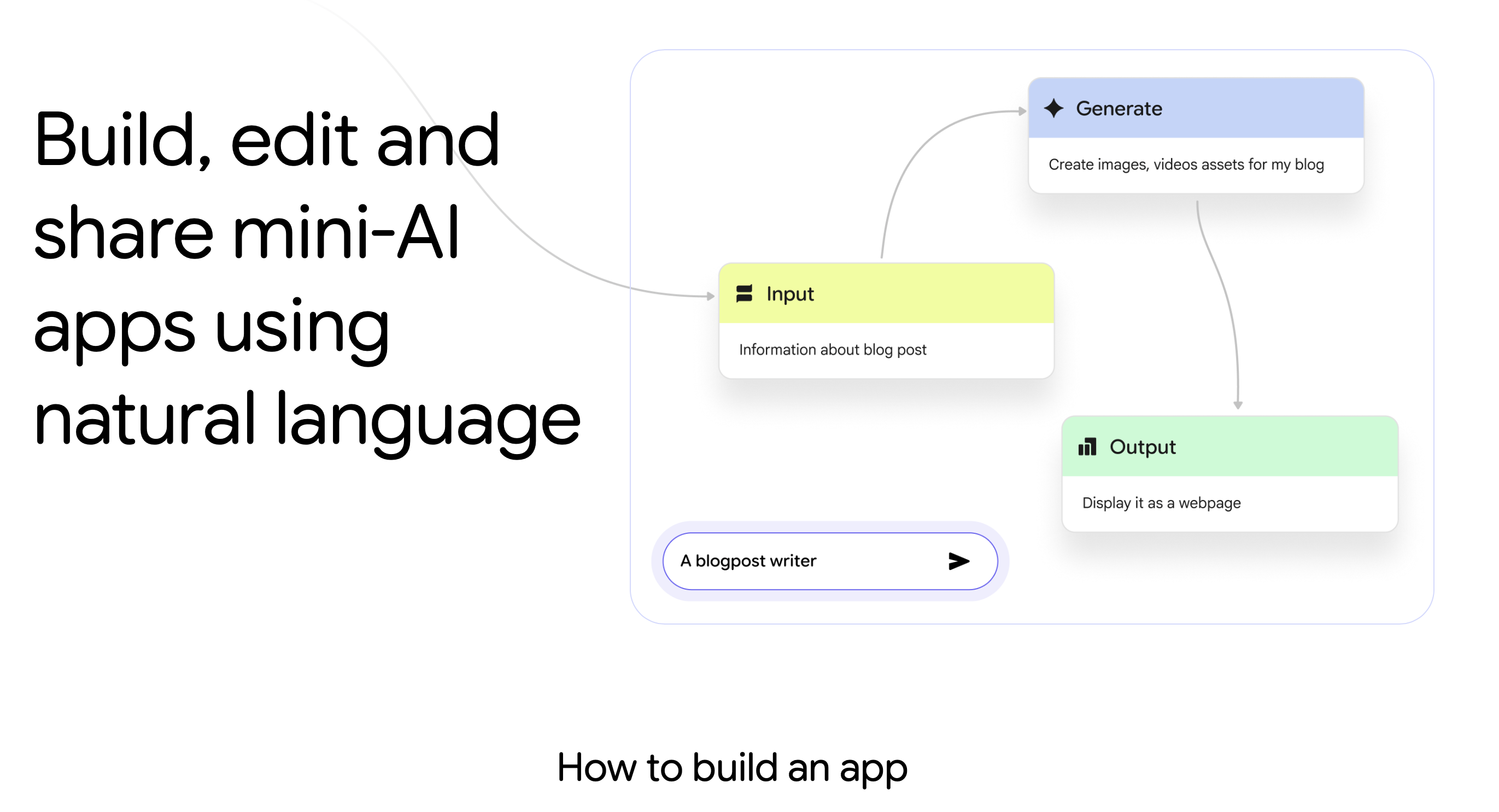

Google Tests Opal, an AI-Powered “Vibe Coding” App for UI Creation

Opal is a new experimental tool from Google that turns natural language prompts into interface designs. The app is designed to let users describe a UI using casual language or mood-based phrases, then watch Opal generate functional components in real time. Google positions Opal as a creative assistant for early-stage prototyping, aiming to make design workflows more accessible to non-coders.

July 21, 2025

Google Adds Conversational Image Segmentation to Gemini 2.5

Gemini 2.5 now supports natural-language image segmentation, letting developers query images with plain text prompts. The feature understands complex relationships, conditional logic, and multilingual queries, streamlining visual AI workflows without custom models. It is available through Google AI Studio and the Gemini API, targeting creative, compliance, and insurance use cases.
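Segmentation results still have to be mapped back onto the original image. The sketch below does that for bounding boxes, assuming a response layout modeled on Google's segmentation documentation: a JSON list of objects with a `label`, a `box_2d` given as `[y0, x0, y1, x1]` normalized to 0 to 1000, and a base64-encoded PNG `mask`. That layout is an assumption to verify against the current Gemini API reference; the sample response is hypothetical, and mask decoding is left out.

```python
import json

# Hypothetical model response following the assumed layout described above.
SAMPLE_RESPONSE = """
[
  {"label": "the dented car door", "box_2d": [250, 100, 750, 500], "mask": "<base64 PNG omitted>"}
]
"""


def to_pixel_box(box_2d: list[int], width: int, height: int) -> tuple[int, int, int, int]:
    """Convert a [y0, x0, y1, x1] box normalized to 0..1000 into (x0, y0, x1, y1) pixels."""
    y0, x0, y1, x1 = box_2d
    return (
        round(x0 / 1000 * width),
        round(y0 / 1000 * height),
        round(x1 / 1000 * width),
        round(y1 / 1000 * height),
    )


def parse_segments(raw: str, width: int, height: int) -> list[dict]:
    """Parse the JSON list and attach a pixel-space box to each labeled segment."""
    segments = json.loads(raw)
    return [
        {"label": seg["label"], "box_px": to_pixel_box(seg["box_2d"], width, height)}
        for seg in segments
    ]


if __name__ == "__main__":
    for seg in parse_segments(SAMPLE_RESPONSE, width=1920, height=1080):
        print(seg)
```

Normalizing coordinates to a fixed range keeps the model's output independent of image resolution, so the caller only needs the original width and height to recover pixel positions.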

July 19, 2025

Google Expands Gemini with Monthly ‘Drops’ and Smarter AI Features

Google is rolling out its July Gemini Drops, a new monthly update program that delivers fresh AI features and usage tips. This month’s release introduces Veo 3 for photo-to-video conversion, Gemini on smartwatches, improved scheduling, and performance boosts with Gemini 2.5 Pro. The move signals Google’s push to make Gemini a more proactive and creative everyday assistant.

July 7, 2025

Google Adds Batch Mode to Gemini API for Cheaper, Scalable AI Jobs

Google has launched a new Batch Mode for its Gemini API, targeting developers with high-volume, non-urgent AI workloads. The new asynchronous endpoint allows users to process large batches of prompts at half the cost of the synchronous API. It also accepts JSONL input files of up to 2 GB and supports advanced features such as context caching and integrated tools. This update makes Gemini more viable for enterprises that need affordable, scalable AI infrastructure.
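Batch jobs like these are submitted as a JSONL file with one request per line. The sketch below builds such a file in memory, checks it against the 2 GB limit, and applies the 50% discount to a cost estimate. The `key`/`request` line layout is an assumption modeled on Google's batch-mode documentation and should be checked against the current API reference; the per-million-token price is a hypothetical placeholder used only to show the arithmetic.

```python
import json

MAX_FILE_BYTES = 2 * 10**9  # 2 GB limit, treated conservatively as decimal gigabytes
BATCH_DISCOUNT = 0.5        # batch processing costs half the synchronous price


def build_batch_jsonl(prompts: list[str]) -> str:
    """Serialize prompts as one JSON request per line (assumed key/request layout)."""
    lines = []
    for i, prompt in enumerate(prompts):
        record = {
            "key": f"request-{i}",
            "request": {"contents": [{"parts": [{"text": prompt}]}]},
        }
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"


def check_size(jsonl: str) -> int:
    """Return the UTF-8 size in bytes, raising ValueError if it exceeds the limit."""
    size = len(jsonl.encode("utf-8"))
    if size > MAX_FILE_BYTES:
        raise ValueError(f"batch file is {size} bytes; limit is {MAX_FILE_BYTES}")
    return size


def estimated_batch_cost(total_tokens: int, sync_price_per_million: float) -> float:
    """Estimate batch cost from a hypothetical synchronous price per million tokens."""
    return total_tokens / 1_000_000 * sync_price_per_million * BATCH_DISCOUNT


if __name__ == "__main__":
    prompts = ["Summarize this support ticket.", "Classify the sentiment of this review."]
    payload = build_batch_jsonl(prompts)
    print(payload, end="")
    print("bytes:", check_size(payload))
    # Hypothetical $1.00 per million tokens, purely for illustration.
    print("estimated cost:", estimated_batch_cost(5_000_000, 1.00))
```

Giving each line a unique key is a design choice worth keeping regardless of the exact schema: asynchronous results may not come back in submission order, and the key is what lets each response be matched to its prompt.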

July 2, 2025