Ollama Adds Desktop App and Performance Upgrades in v0.10.0

The latest Ollama update brings a native desktop app for macOS and Windows, along with CLI and multi-GPU performance enhancements. It's a move aimed at making local LLM deployment more accessible and efficient across devices.

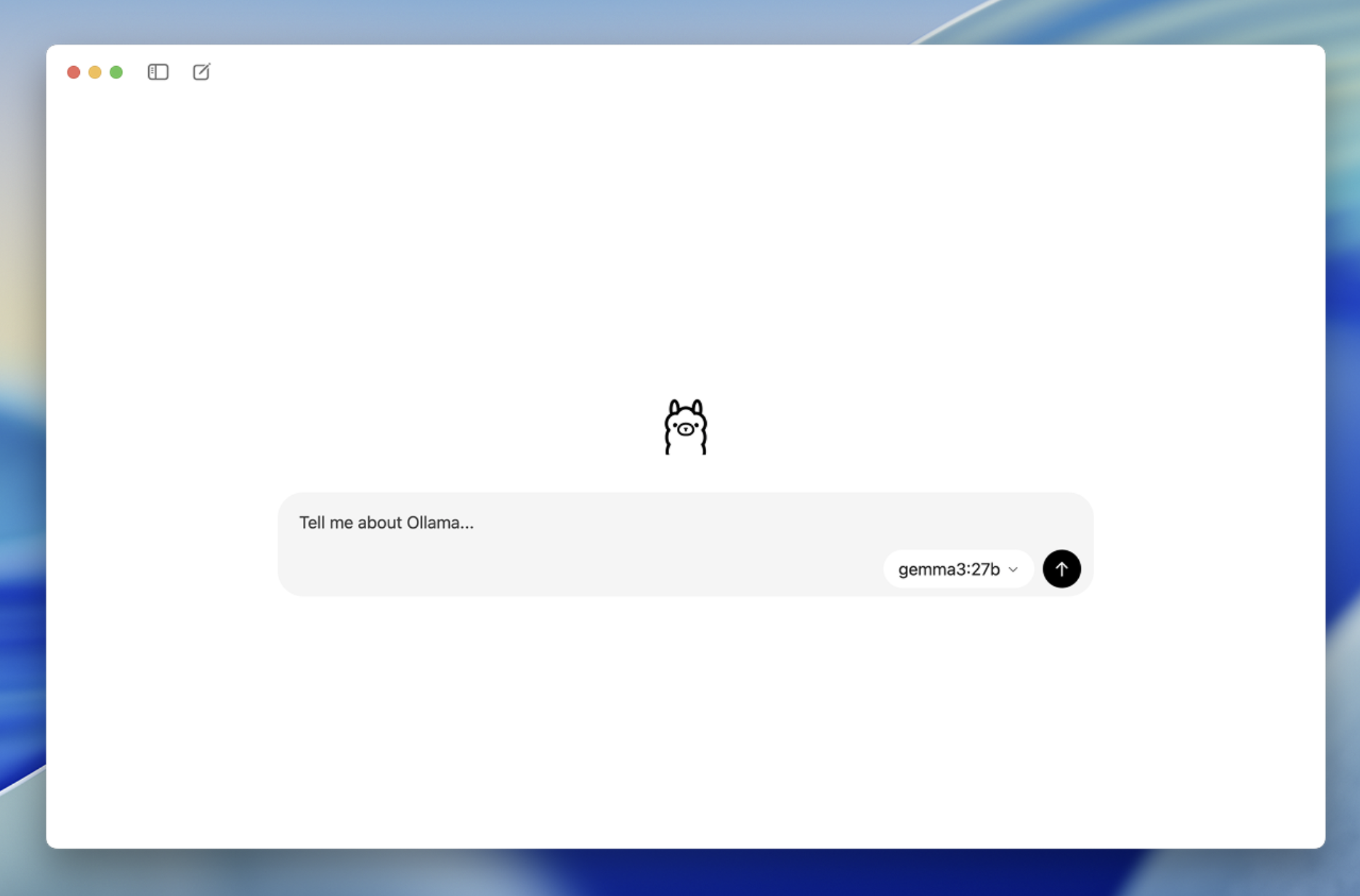

Ollama has released version 0.10.0, introducing a GUI desktop app for both macOS and Windows. The app complements the CLI-based workflow and marks the project’s first major push toward a more user-friendly interface for local large language models. Alongside the desktop app, the release includes several developer-focused changes: faster multi-GPU performance, richer observability through `ollama ps`, and better error reporting in commands like `ollama show`.

The new version also fixes model-specific issues for granite3.3 and mistral-nemo, adds WebP image support to the OpenAI-compatible API, and adjusts the default parallel request handling to reduce resource contention. These updates aim to reduce friction for users deploying and experimenting with LLMs on local machines.
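To make the WebP addition concrete, here is a minimal Python sketch of how an image might be sent through the OpenAI-compatible chat endpoint. It assumes Ollama is listening on its default local address and that a vision-capable model is installed; the model name `gemma3` and both helper functions are illustrative assumptions, not something taken from the release notes.

```python
import base64
import json
import urllib.request

# Assumed default address of a locally running Ollama server.
OLLAMA_CHAT_URL = "http://localhost:11434/v1/chat/completions"


def build_webp_chat_payload(image_bytes: bytes, prompt: str, model: str = "gemma3") -> dict:
    """Build an OpenAI-style chat request that embeds a WebP image as a data URL.

    The model name is a placeholder; substitute any vision-capable model you have pulled.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:image/webp;base64,{encoded}"},
                    },
                ],
            }
        ],
    }


def send_chat_request(payload: dict, url: str = OLLAMA_CHAT_URL) -> dict:
    """POST the payload as JSON and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

With a server running, reading a file's bytes with `open("photo.webp", "rb").read()`, passing them to `build_webp_chat_payload`, and handing the result to `send_chat_request` should return a standard chat completion object. Keeping payload construction separate from the network call makes the request shape easy to inspect without a server.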

By adding a graphical user interface, Ollama is positioning itself as a more approachable entry point for AI developers and hobbyists, particularly on Windows, where CLI workflows have historically seen lower adoption. At the same time, the under-the-hood upgrades strengthen its appeal to power users running multi-model environments or GPU-intensive workflows. This release keeps Ollama competitive in the fast-evolving local LLM toolchain landscape.
