NVIDIA Releases Llama Nemotron Super v1.5 to Push Open-Source Agent Reasoning

The new 49B model tops open benchmarks with a 128K context window, tool-use capabilities, and single‑GPU efficiency. It's a signal that NVIDIA aims to lead in agent‑focused LLMs that actually run in production.

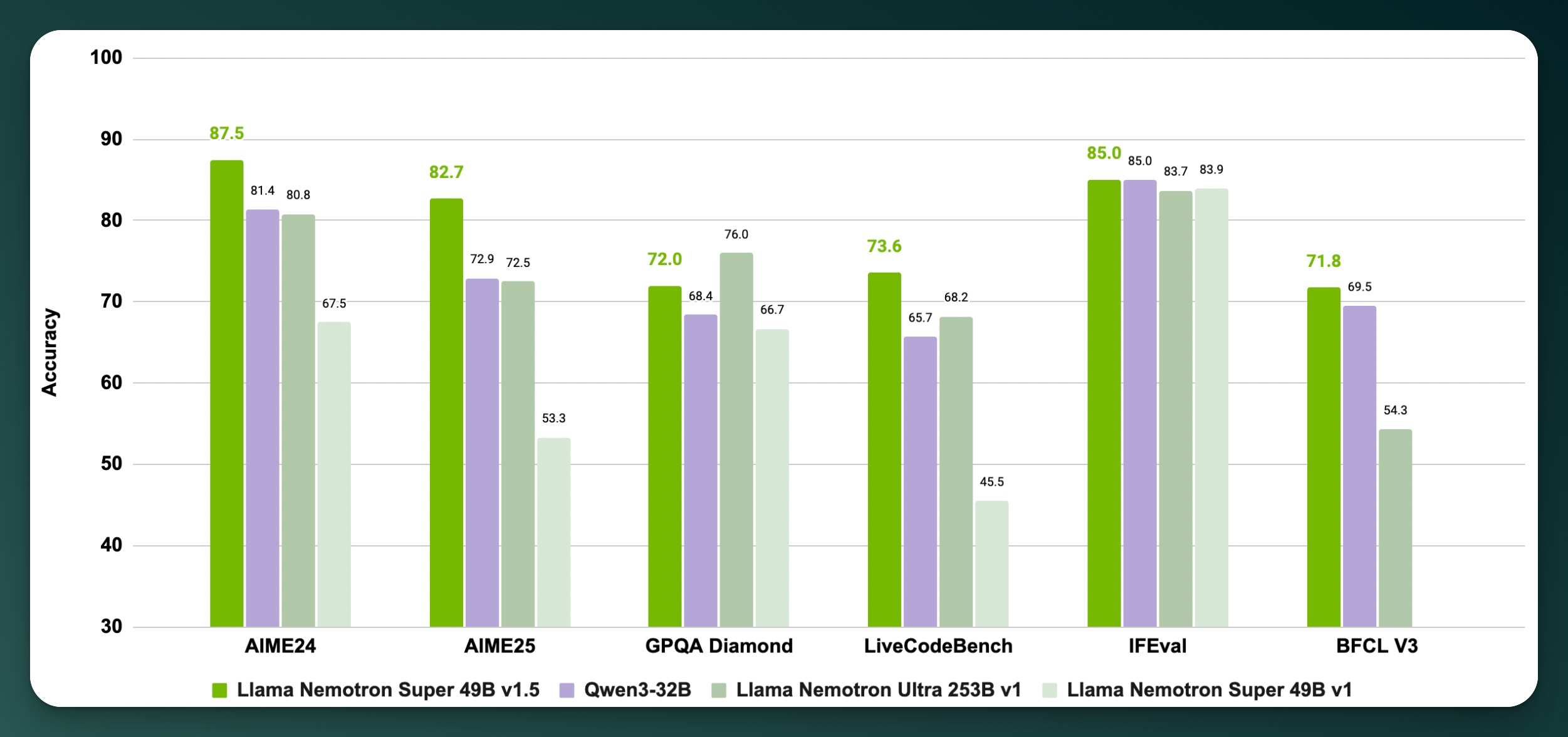

NVIDIA has released Llama‑3.3‑Nemotron‑Super 49B v1.5, an open-weight LLM designed to deliver top-tier reasoning, math, and tool-calling performance at mid-size scale. The model outperforms much larger open competitors such as Qwen3‑235B and DeepSeek R1‑671B, and even NVIDIA’s own earlier Nemotron Ultra 253B, across key reasoning benchmarks. Despite its smaller footprint, it offers a 128K-token context window and excels at multi-turn reasoning tasks.

What sets v1.5 apart is its combination of size and accessibility. It was built using neural architecture search (NAS) to optimize for H100/H200 GPUs, meaning it runs efficiently on a single high-end card. This lowers the barrier for developers building RAG agents, math solvers, and code assistants in real-world applications. Alongside the model, NVIDIA has released the full post-training dataset used for alignment and reasoning tuning, a move that enhances transparency and reproducibility in commercial deployments.
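To make the single-GPU claim concrete, here is a rough back-of-the-envelope sketch in Python. It is not NVIDIA's sizing method. It assumes 80 GB of memory for an H100 and 141 GB for an H200, counts model weights only (no KV cache, activations, or runtime overhead), and treats the precision options as illustrative. The function and variable names are made up for this example.

```python
# Rough weights-only memory estimate for a 49B-parameter model.
# Assumptions (not from NVIDIA's announcement): GPU capacities below,
# and that KV cache / activation memory is ignored entirely.

PARAMS = 49e9  # parameter count, from the model name

GPU_MEMORY_GB = {"H100": 80, "H200": 141}   # assumed per-card capacity
BYTES_PER_PARAM = {"bf16": 2, "fp8": 1}     # illustrative precisions


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed to hold the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_on_gpu(precision: str, gpu: str) -> bool:
    """True if the weights alone fit in the assumed memory of one card."""
    needed = weight_memory_gb(PARAMS, BYTES_PER_PARAM[precision])
    return needed <= GPU_MEMORY_GB[gpu]


if __name__ == "__main__":
    for precision in BYTES_PER_PARAM:
        needed = weight_memory_gb(PARAMS, BYTES_PER_PARAM[precision])
        for gpu in GPU_MEMORY_GB:
            verdict = "fits" if fits_on_gpu(precision, gpu) else "does not fit"
            print(f"{precision} weights ({needed:.0f} GB) on one {gpu}: {verdict}")
```

Under these assumptions, 16-bit weights need about 98 GB, which is more than one H100 holds but within an H200's capacity, while 8-bit weights need about 49 GB. Long-context workloads add KV-cache memory on top, so real headroom will be smaller than this estimate suggests.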

In a field increasingly dominated by massive, inaccessible models, NVIDIA is positioning Nemotron Super v1.5 as the pragmatic choice for developers of agentic systems. It is more than a benchmark leader: it is designed to work in real production environments, with open weights, permissive licensing, and GPU efficiency that startups and small and mid-sized businesses (SMBs) can use today.
