GPT‑OSS: OpenAI Publishes 20B and 120B Open‑Weight Models for Local Deployment

OpenAI has released gpt‑oss‑120b and gpt‑oss‑20b, its first open‑weight models since GPT‑2. According to OpenAI's own benchmarks, the models match or exceed comparable proprietary models on several tasks, a rare moment of open-weight leadership from a U.S.-based AI lab. With support for tool use, chain‑of‑thought reasoning, and builds optimized for Apple Silicon Macs, gpt‑oss is designed for full local control.

OpenAI has launched two new open-weight models, gpt‑oss‑120b and gpt‑oss‑20b, under an Apache 2.0 license. The move ends a drought of more than five years in open-weight language model releases from OpenAI. Both models can be fine-tuned and deployed locally or via major platforms including Hugging Face, AWS, Azure, and Databricks. The 120b model contains roughly 117 billion parameters, of which about 5.1 billion are active per token, and runs on a single 80 GB H100 GPU. The smaller 20b variant fits in 16 GB of memory.
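To make the single-GPU claim concrete, here is a rough back-of-the-envelope sketch of the weight memory involved. The 117 billion parameter count and the 80 GB budget come from the figures above; the 4.25 bits per parameter is an assumption (a typical average for 4-bit block-quantized weights), not a number stated in this article, and the estimate ignores activations and the KV cache.

```python
# Rough estimate of the memory needed to hold model weights alone.
GB = 1e9  # decimal gigabytes


def weight_memory_gb(n_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in GB for a given parameter count and precision."""
    return n_params * bits_per_param / 8 / GB


# 117e9 parameters is the article's figure for gpt-oss-120b.
N_PARAMS_120B = 117e9
# ASSUMPTION: average storage cost of ~4.25 bits per parameter after quantization.
ASSUMED_BITS_PER_PARAM = 4.25
GPU_MEMORY_GB = 80  # single H100, per the article

quantized_gb = weight_memory_gb(N_PARAMS_120B, ASSUMED_BITS_PER_PARAM)
half_precision_gb = weight_memory_gb(N_PARAMS_120B, 16)

print(f"Quantized weights:  ~{quantized_gb:.0f} GB")
print(f"16-bit weights:     ~{half_precision_gb:.0f} GB")
print(f"Fits in {GPU_MEMORY_GB} GB when quantized: {quantized_gb < GPU_MEMORY_GB}")
```

Under these assumptions the quantized weights come to roughly 62 GB, which leaves some headroom on an 80 GB card, while the same weights at 16 bits per parameter would need about 234 GB.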

The release comes after weeks dominated by China's open-source leaders, including DeepSeek-VL, Qwen2, and Kimi 2.0. Until now, U.S. labs lagged in making high-quality open models available. With gpt‑oss, OpenAI re-enters the open-source scene with models that not only compete with the best available open models but, by OpenAI's own benchmarks, outperform them in some areas. It’s a notable shift in momentum in the global race for open AI infrastructure.

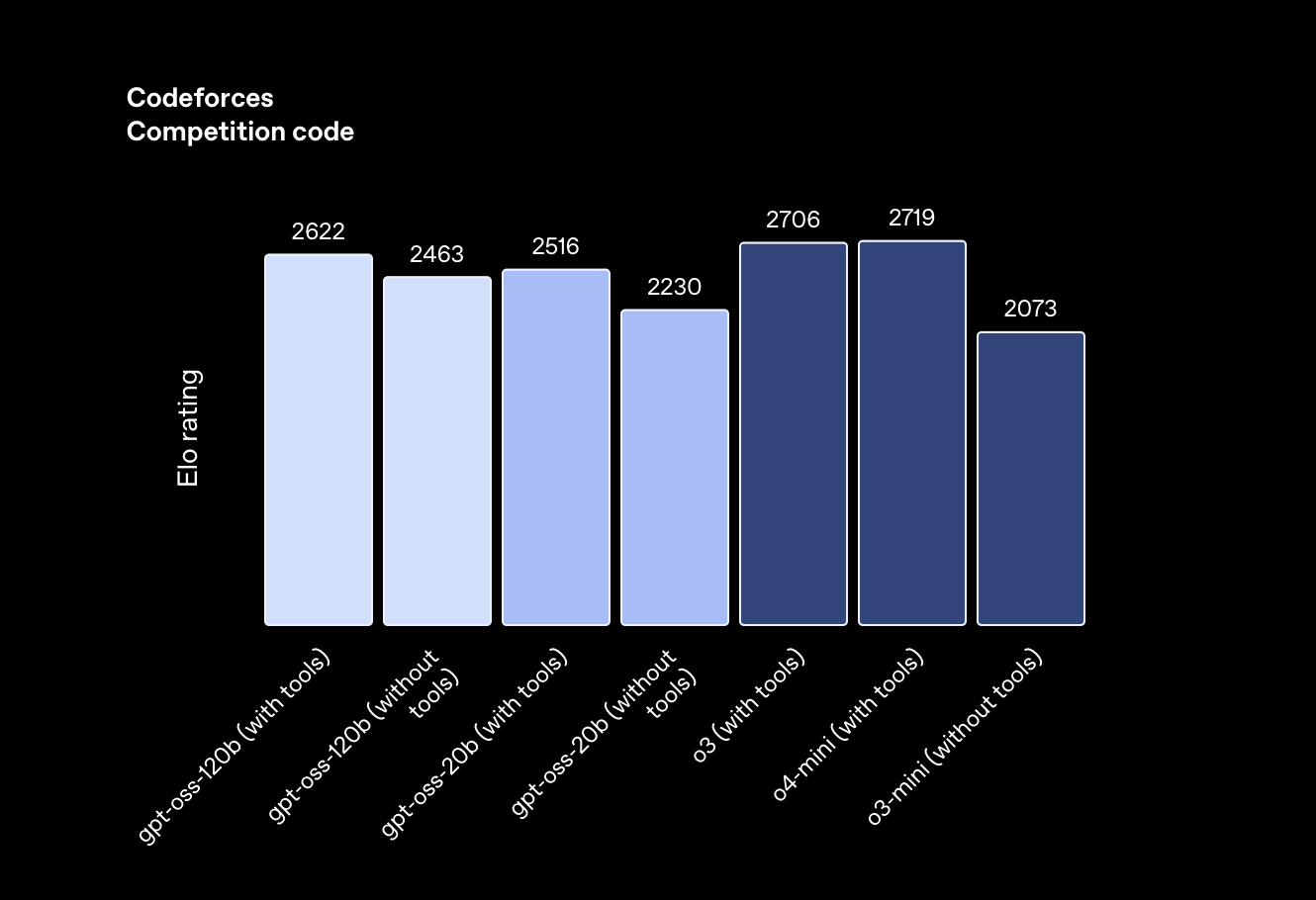

Benchmark results shared by OpenAI show gpt‑oss‑120b matching or outperforming o4‑mini, a proprietary model, on benchmarks such as MMLU, Codeforces, and HealthBench. The 20b model is also competitive, matching or exceeding o3‑mini on several metrics. The models support agentic use cases, configurable reasoning effort, and chain-of-thought prompting, making them well-suited for developers building autonomous systems or local copilots.
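As an illustration of what configurable reasoning effort can look like in practice, the sketch below builds a chat request body in the common OpenAI-style `model` plus `messages` format. It assumes the effort level is selected with a `Reasoning: <level>` line in the system message; that convention, the helper names, and the `gpt-oss-20b` model identifier are assumptions for illustration and may differ depending on the server you run the model behind.

```python
import json

# ASSUMPTION: the three effort levels and the "Reasoning: <level>" system line
# are an illustrative convention, not something specified in this article.
VALID_EFFORTS = ("low", "medium", "high")


def build_messages(user_prompt: str, effort: str = "medium") -> list:
    """Return chat messages with the reasoning effort set in the system message."""
    if effort not in VALID_EFFORTS:
        raise ValueError(f"effort must be one of {VALID_EFFORTS}, got {effort!r}")
    return [
        {"role": "system", "content": f"Reasoning: {effort}"},
        {"role": "user", "content": user_prompt},
    ]


def build_request(model: str, user_prompt: str, effort: str = "medium") -> dict:
    """Assemble a chat-completions-style request body (hypothetical helper)."""
    return {"model": model, "messages": build_messages(user_prompt, effort)}


# "gpt-oss-20b" is a placeholder; use whatever name your local server exposes.
request = build_request("gpt-oss-20b", "Plan the steps to refactor this module.", "high")
print(json.dumps(request, indent=2))
```

The resulting dictionary can be posted to any local server that accepts this request shape. Keeping the effort level as a single parameter makes it easy to trade latency for answer quality per request.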

For Apple users, there’s another reason to pay attention. MLX-optimized builds of both models are already available, enabling inference on Apple Silicon Macs. The 20b model in particular can run on consumer MacBooks with enough unified memory, making gpt‑oss a practical foundation for desktop-based LLM applications. This lowers the barrier for indie devs and researchers who want fine-grained control without relying on cloud APIs.

Unlike most closed-source commercial models, gpt‑oss is designed for modification. The full training recipe is not included, but OpenAI has published a detailed model card, parameter counts, architecture notes, and fine-tuning tools. Safety measures include evaluations by a third-party red-teaming vendor, a red-teaming API interface, and alignment via supervised fine-tuning and reinforcement learning.

OpenAI says the release is meant to support safety research, transparency, and broader access. It follows the April launch of o3 and o4‑mini and may reflect internal tension between OpenAI’s closed commercial roadmap and its original open science roots. By releasing performant open-weight models now, OpenAI also sets a benchmark that could pressure others, particularly Meta and Anthropic, to follow suit.

While it stops short of full end-to-end reproducibility, gpt‑oss offers what many researchers and startups have asked for: a U.S.-backed, high-performance model that can be studied, deployed, and adapted under a permissive license. It may not mark a total return to openness, but it’s a meaningful step toward rebuilding trust and enabling local AI development at scale.
